CX leaders are bombarded with AI solutions — but how many actually deliver real value?

Every CX vendor claims to be ‘AI-powered’ — but how much of it is just marketing fluff?

In this guide, we’ll cut through the noise. First, we’ll examine the AI marketplace (including new developments like DeepSeek) and separate strengths from hype. Then, we’ll show how top CX teams are actually using AI to save time, improve experiences, and prove the value of CX. Along the way, you’ll develop a deeper understanding of how modern AI really works—so you can make smarter decisions anywhere AI touches your role.

This article covers a lot of ground.

There are a lot of grand claims made about the future of AI, but we’re going to focus on what’s working well in the here-and-now: what the pragmatic teams we work with are seeing value in today. If grander claims, like multi-channel AI-based personalisation, become commonly operationalised, we’ll update this guide.

You can, of course, skip to the good bit, but you’ll be better informed if you take a minute to catch up on some history and understand the strengths and weaknesses of the technology underpinning modern AI before we look at specific applications of it.

AI and ML (machine learning) have been around for a long time. But practical business uses became more commonplace from around 2015 to 2020, when firms like Google and Amazon made available high-quality, inexpensive NLP (natural language processing) tools that could detect customer sentiment in feedback and pull out key topics and themes.

These NLP tools were good, although not as impressive as the ones we enjoy today, and were quickly integrated into CX insight platforms like CustomerSure, becoming ‘table stakes’ for serious feedback analysis.

However, a watershed moment for AI came with the public release of ChatGPT in November 2022. Attracting 100 million users in just two months, ChatGPT is arguably the fastest-adopted piece of technology ever.

But ChatGPT is just a chatbot ‘wrapper’ around OpenAI’s core ‘GPT’ model. This was initially GPT-3.5, but the more recent GPT-4, GPT-4o and o1 models rapidly added new features and improved on GPT’s already-impressive capabilities.

Most people know GPT for text generation: Chatbots, reports, code, and web content. But ironically, even ChatGPT itself doesn’t claim this is its best use case, instead hedging that it’s best used for a range of tasks, like tutoring, editing human-written text, and creative brainstorming.

One other thing GPT is good at? Customer feedback analysis, including sentiment analysis and topic detection.

GPT is just one example of a Large Language Model (LLM). Although ChatGPT was the line in the sand that brought mass awareness of the power of AI, GPT is far from the only LLM in town.

Many, many other LLMs exist, from commercial offerings like Google’s Gemini and Anthropic’s Claude to freely available models like Meta’s Llama. These LLMs are broadly comparable in power and can be used to accomplish similar tasks.

In 2023, an internal Google memo titled “We have no moat” famously leaked. The thrust of the memo was that, without a ‘killer’ product wrapped around the core LLM, any company developing its own AI technology faces a rocky commercial future, because other research groups are giving away equally powerful tools for free.

This memo turned out to be spot-on when, over Christmas 2024, DeepSeek made their V3 LLM freely available.

DeepSeek outperforms GPT-4o on a number of benchmarks, and does so at a significantly lower processing cost. That means it’s now feasible for a company with the right infrastructure to run its own GPT-4o-equivalent model on its own private servers, more cost-effectively than paying OpenAI.

This means CX teams now have real choices: They can run their own completely private LLMs (subject to IT resources and infrastructure), reducing data privacy issues and simplifying software subscriptions.

For some teams, this could mean significant cost savings and fewer data governance headaches compared with commercial LLMs. A CX platform (that’s us!) should be just as happy working with a team’s private LLM as it is using best-in-class commercial solutions like GPT-4o and Claude.

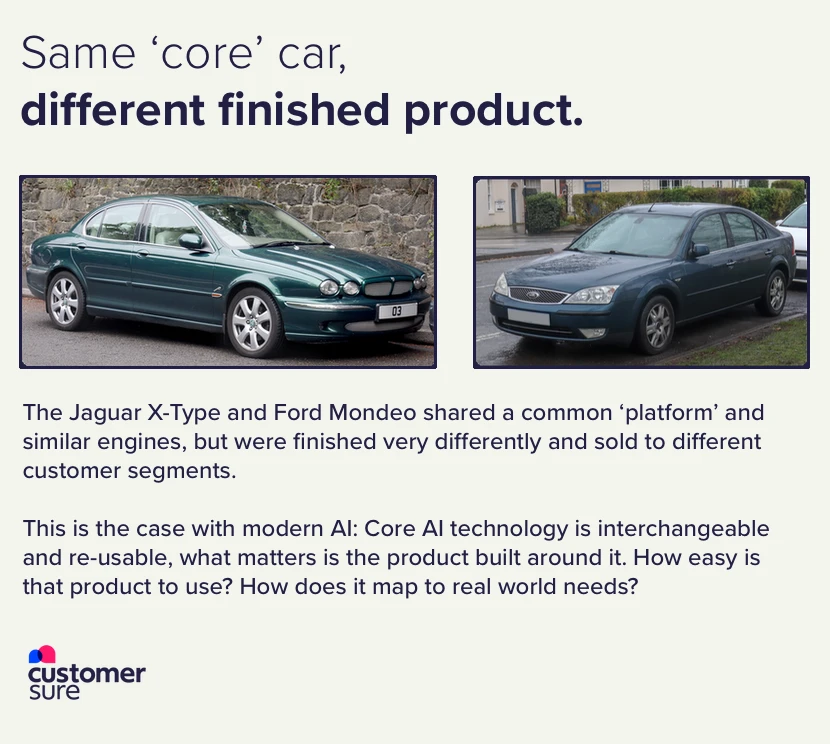

Like coal, electricity and computer networks, breakthroughs that powered historic economic transformations, AI ‘engines’ are now a commodity: Cheap and widely available. But, just as with those historical commodities, it’s possible to build wildly different products from the same building blocks.

If no AI provider has a ‘secret sauce’ that gives it a competitive edge, how do you choose? And how do you get the best results? First, it helps to understand what it takes to get good results from an LLM.

(We’ll skate over the details of exactly how LLMs perform their magic, but keen readers might enjoy this blog post, which explains many of the key concepts without reaching for too much maths or jargon.)

If you ask ChatGPT to analyse customer feedback, it will do an adequate job, probably better than what off-the-shelf tools in 2017 would manage. But of course, ‘adequate’ isn’t the goal.

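When platforms like CustomerSure use an LLM for customer feedback analysis, they add a number of ‘layers’ on top of the raw LLM to produce higher-quality results. In short, these layers are fine-tuning (giving the model context about your business), prompt engineering and recursive critique.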

LLMs provide better responses when they have more context on a given domain (i.e. your business). So a key step is providing the LLM with a lot of background and context on who you are, how you make your money, how you’re structured, and what your existing customers say about you.
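As a rough illustration of this first layer, here’s a minimal Python sketch that bundles business background into a preamble placed in front of every analysis prompt. The `BusinessContext` class, its fields and the example housing association are all hypothetical. This sketch supplies context in the prompt itself (often called in-context learning); fine-tuning in the strict sense, where a model’s weights are retrained on your data, is a separate technique not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class BusinessContext:
    """Hypothetical container for the background an LLM needs about your business."""
    name: str
    revenue_model: str
    departments: list[str]
    example_feedback: list[str] = field(default_factory=list)


def build_context_block(ctx: BusinessContext) -> str:
    """Render the business context as a preamble to prepend to every analysis prompt."""
    lines = [
        f"You are analysing customer feedback for {ctx.name}.",
        f"How the business makes money: {ctx.revenue_model}",
        "Teams that can own an issue: " + ", ".join(ctx.departments),
    ]
    # Real examples of what customers say help the model calibrate tone and topics.
    if ctx.example_feedback:
        lines.append("Examples of what our customers typically say:")
        lines.extend(f"- {example}" for example in ctx.example_feedback)
    return "\n".join(lines)


context = BusinessContext(
    name="Example Housing Association",
    revenue_model="rental income from social housing tenants",
    departments=["Repairs", "Lettings", "Customer Services"],
    example_feedback=["The repair took three visits to fix."],
)
print(build_context_block(context))
```

The more of your structure and vocabulary this preamble captures, the less the model has to guess.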

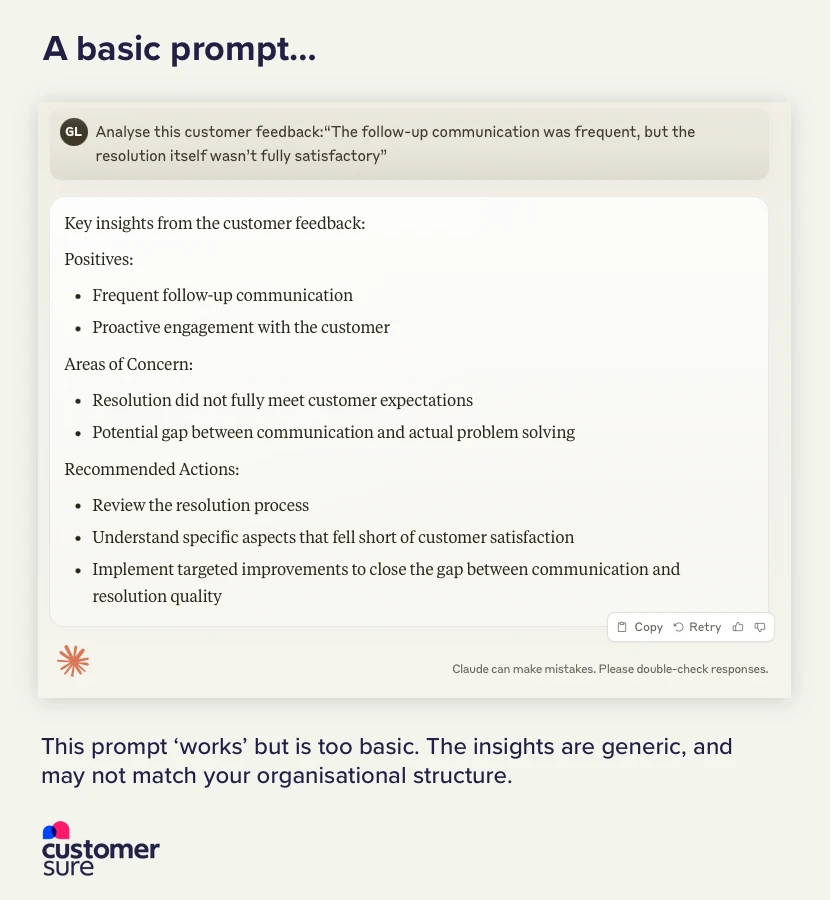

The ‘prompt’ to an LLM is the text you send to the AI ‘core’ that causes it to ‘think’ and send you a response. For example:

Analyse the following customer feedback:

The follow-up communication was frequent, but the resolution itself wasn’t fully satisfactory

This basic prompt works as expected. But how useful is it?

To get more accurate, more actionable results, you need a high-quality prompt. Anthropic run a great interactive course in prompt engineering that gives a good insight into what goes into writing a good prompt. Suffice it to say, the more effort and experience that go into the prompt, the higher the quality of the analysis.
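To make ‘high-quality prompt’ a little more concrete, the sketch below (Python, with hypothetical function names) tightens the basic prompt above in three ways: it fixes the list of topics, fixes the allowed sentiment labels, and asks for JSON so the reply can be checked by code rather than read by a person. The reply shown is illustrative, not real model output, and how you actually send the prompt depends on your LLM provider’s client library.

```python
import json

ALLOWED_SENTIMENTS = {"positive", "neutral", "negative"}


def build_analysis_prompt(feedback: str, topics: list[str]) -> str:
    """A more specific prompt: fixed topic list, fixed labels, machine-readable output."""
    return (
        "Classify the customer feedback below.\n"
        f"Only use these topics: {', '.join(topics)}.\n"
        "For each topic the customer mentions, give a sentiment of "
        "positive, neutral or negative.\n"
        'Reply with JSON only, in the form {"topics": [{"topic": "...", "sentiment": "..."}]}.\n\n'
        f"Feedback: {feedback}"
    )


def parse_analysis(reply: str, topics: list[str]) -> list[dict]:
    """Parse the model's JSON reply, rejecting any topic or label we didn't ask for."""
    items = json.loads(reply)["topics"]
    for item in items:
        if item.get("topic") not in topics or item.get("sentiment") not in ALLOWED_SENTIMENTS:
            raise ValueError(f"Unexpected item in model reply: {item}")
    return items


topics = ["Communication", "Resolution"]
prompt = build_analysis_prompt(
    "The follow-up communication was frequent, but the resolution itself "
    "wasn't fully satisfactory",
    topics,
)
# An illustrative reply, not real model output:
reply = (
    '{"topics": [{"topic": "Communication", "sentiment": "positive"}, '
    '{"topic": "Resolution", "sentiment": "negative"}]}'
)
print(parse_analysis(reply, topics))
```

Validating the reply matters as much as the prompt: an answer outside the agreed labels is caught rather than silently counted.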

Finally, always be aware that, although they’re intimidatingly powerful, LLMs will occasionally state things that simply aren’t true (often called ‘hallucination’). This inevitably applies to feedback analysis carried out by an LLM too.

There’s no guaranteed defence against this: It’s a problem that’s baked into how the technology works. But it can be mitigated through techniques like recursive critique, where, instead of accepting an LLM’s first answer, you subject that answer to further scrutiny before accepting it.

Think of it like a writer revising an article. Instead of publishing the first draft, they re-read it, check for errors, and refine weak points before publishing.
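Here’s a minimal sketch of that critique-and-revise loop in Python, assuming a hypothetical `llm` function that takes a prompt string and returns the model’s reply (in practice, a thin wrapper around whichever provider’s client you use). The critique wording and the ‘OK’ convention are assumptions for illustration, not a description of how any particular platform implements recursive critique.

```python
from typing import Callable


def analyse_with_critique(llm: Callable[[str], str], task: str, max_rounds: int = 2) -> str:
    """Draft an answer, then ask the model to critique and revise it.

    `llm` is a hypothetical stand-in: any function that sends a prompt to a
    model and returns its text reply.
    """
    draft = llm(task)
    for _ in range(max_rounds):
        critique = llm(
            "Check this answer against the task. Reply 'OK' if it is correct, "
            f"otherwise list the problems.\nTask: {task}\nAnswer: {draft}"
        )
        if critique.strip().upper().startswith("OK"):
            break  # the review pass found nothing to fix
        draft = llm(
            "Revise the answer to fix these problems.\n"
            f"Task: {task}\nAnswer: {draft}\nProblems: {critique}"
        )
    return draft


# Offline usage with a scripted stand-in for a real model:
scripted = iter([
    "Sentiment: positive",                                              # first draft
    "The answer ignores the unsatisfactory resolution.",                # critique
    "Sentiment: mixed (positive communication, negative resolution)",   # revision
    "OK",                                                               # second critique
])
print(analyse_with_critique(
    lambda prompt: next(scripted),
    "Analyse: The follow-up communication was frequent, but the resolution "
    "itself wasn't fully satisfactory",
))
```

Capping the number of rounds keeps cost and latency predictable; each round is another model call.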

LLMs work by receiving text (the ‘prompt’), turning that text into numbers, and using some very fancy maths to decide which text to send back in response.

An ‘out-the-box’ LLM will do an acceptable job of classifying customer feedback, but a customer experience management platform can improve the quality and accuracy of that analysis through a combination of fine-tuning, prompt engineering and recursive critique.

Finally, analysis accuracy is only half the story. GPT-4o might get it right 95% of the time, versus a ‘trained’ LLM getting it right 99.9% of the time, but neither will add to your bottom line unless it’s easy to draw insights from that analysis and put those insights into the hands of teams empowered to improve things for customers.
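To put those illustrative percentages into volume terms, assume (hypothetically) 10,000 comments a year. The gap between 95% and 99.9% accuracy is then the gap between around 500 misread comments and around 10:

```python
def expected_misclassifications(n_comments: int, accuracy: float) -> int:
    """Roughly how many comments an analyser with a given accuracy gets wrong."""
    return round(n_comments * (1 - accuracy))


# Illustrative volume and accuracy figures, not measurements.
for label, accuracy in [("out-the-box", 0.95), ("trained", 0.999)]:
    print(label, expected_misclassifications(10_000, accuracy))
```

Either way, the number that matters to customers is how many of the correctly analysed comments lead to action.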

Nothing in life comes for free. LLMs are shockingly flexible, powerful tools, great at feedback analysis… But to make best use of them, it’s important to understand where they fall short.

We’ll also cover a few areas where the public perception of LLMs (often formed from consumer interactions with ChatGPT in 2022) has not kept pace with the technical reality in 2025.

Are LLMs biased? Yes. LLMs are trained on vast amounts of data written by humans, and then further fine-tuned by humans to give ‘desirable’ outputs based on that data.

The outputs of any LLM are biased based on the biases of these inputs.

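How this affects your use of an LLM depends on how you’re using it. In feedback analysis, for example, the same phrase can carry quite different sentiment for British and American readers: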

“Not bad at all.”

🇬🇧: “Actually quite good.” (Positive)

🇺🇸: More neutral or even slightly negative, implying it’s just acceptable. (Neutral/Negative)

“Quite good, but could be better.”

🇬🇧: “Moderately good, but not great.” (Neutral/Negative)

🇺🇸: Sounds more like “very good.” (Neutral/Positive)

“Thanks for sorting that out. Cheers.”

🇬🇧: “Cheers” is fairly routine in the UK. (Weak positive)

🇺🇸: “Cheers” is often associated with celebration or strong approval. (Strong positive)

As you can see, these biases tend to be edge cases, but they do exist.

Not necessarily. LLMs are probability-based, which means they can give different outputs for the same input. And in ultra-rare edge cases, those outputs won’t even necessarily make sense. However, you need to bear in mind two key considerations:

As with everything we’ve discussed so far, once you know how the underlying tools work, you (or your CX platform partner) can use techniques like prompt engineering and recursive critique to get the best results.
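The ‘probability-based’ point is easiest to see in miniature. The Python sketch below is a deliberate simplification with made-up scores: a real model scores tens of thousands of possible tokens at every step, not three labels. It shows how sampling with a ‘temperature’ setting can give different answers for identical input, while greedy decoding (temperature 0) always picks the top-scoring option. In practice, hosted APIs may not guarantee identical output even at low temperature.

```python
import math
import random


def softmax(scores: dict[str, float], temperature: float) -> dict[str, float]:
    """Turn raw scores into probabilities (temperature must be > 0).

    Lower temperatures sharpen the distribution towards the top score.
    """
    scaled = {token: s / temperature for token, s in scores.items()}
    top = max(scaled.values())  # subtract the max for numerical stability
    exps = {token: math.exp(s - top) for token, s in scaled.items()}
    total = sum(exps.values())
    return {token: e / total for token, e in exps.items()}


def pick_next(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    if temperature == 0:
        return max(scores, key=scores.get)  # greedy decoding: always the top option
    probs = softmax(scores, temperature)
    tokens = list(probs)
    return rng.choices(tokens, weights=[probs[t] for t in tokens], k=1)[0]


# Hypothetical scores a model might assign each label for one piece of feedback.
scores = {"negative": 2.0, "neutral": 1.5, "positive": 0.2}
rng = random.Random(42)
print([pick_next(scores, 1.0, rng) for _ in range(5)])  # sampled: labels can differ between draws
print([pick_next(scores, 0, rng) for _ in range(5)])    # greedy: always "negative"
```

This is why a well-built pipeline pins down settings like temperature and adds checks, rather than trusting any single draw.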

What about AI’s energy use? The honest answer is: it’s complicated.

The worst-case scenarios about LLM energy consumption that circulate on social media are rarely entirely accurate. Whilst training models and serving requests do consume a large amount of power, efficiency is increasing rapidly.

Consider that both OpenAI and Anthropic have approximately halved their per-token pricing between 2023 and 2025 because it costs them less energy to serve each request.

Or consider how much more cost-effective DeepSeek is than both: Its cost-per-token is roughly a tenth of theirs, largely due to a huge increase in power efficiency.

Or read this hugely in-depth research into the power consumption of AI which reports that modern Nvidia GPUs are 25x more power-efficient than the previous generation.

None of this means that LLMs are so absurdly energy-efficient to run that we shouldn’t care about their power consumption. It just means that the energy efficiency story in 2025 is vastly different to how it was reported in 2022.

Much of this new power demand is also being met by datacentre builders’ investments in renewables (see the Bloomberg report for more on this). So although every company needs to consider its carbon footprint carefully, AI isn’t uniquely bad: It’s simply another energy cost that needs to be budgeted for, minimised, and offset if necessary.

Will your customer data be used to train AI models? If you’re doing it right, no.

It’s good that we live in a world which is more aware of data security than ever, but this particular concern feels rooted in consumer experimentation with ChatGPT, rather than business-grade use of LLM tools.

If a consumer uses the web version of ChatGPT, they are opted in by default to having their conversations used to improve future models. So under no circumstances should you experiment with pasting customer data into ChatGPT like this to analyse it.

However, for commercial use of GPT-4o through OpenAI’s API, data is not used for training by default. Google don’t use data from their paid, business-grade Gemini services to train Gemini, and Anthropic (with limited exceptions) don’t use your data to train Claude.

This doesn’t mean we should become careless about personal data. It’s important that we work within the framework established by GDPR to send surveys and process customer data, which means:

No AI system, from classical NLP systems to modern LLMs, is flawless when analysing highly nuanced feedback, especially when sarcasm is thrown into the mix. It’s fair to say that many humans struggle with this as well. LLMs outperform earlier approaches here thanks to their greater flexibility and contextual understanding, but they still can’t operate flawlessly.

Of course, as with the issues around bias and non-repeatability of results, a ‘trained’ LLM can outperform an ‘out the box’ LLM to such an extent that this isn’t an issue in day-to-day use.

Technology doesn’t make or break a CX project — execution does.

Beware vendors selling a “magic AI edge.” The history of the entire software industry to date shows that nobody has a technical edge, or at least not one that lasts for long. What matters to customers (and therefore your bottom line) is how well you apply insights, empower teams, and act on customer feedback.

In the space of three years, we’ve seen OpenAI go from unassailable market leader, to closely matched by Anthropic, to DeepSeek giving away a tool that can perform the same tasks as either at a tenth of the cost.

A CX platform that uses any of these products, or its own “secret sauce”, will do an exemplary job of classifying customer feedback by sentiment and topic. But on its own, that classification isn’t going to delight your customers, and it isn’t going to contribute to your bottom line.

What matters is the insights you derive from that feedback, how you act on those insights, and how you handle at-risk customers who have urgent needs you need to meet.

A fully-featured CX platform, like CustomerSure — or any of our competitors — is able to handle the technical side of feedback analysis. But if you’d benefit from a genuine CX partner, who will help you map journeys, work with stakeholders across the business, and gently challenge you with best practice, we can do that too.

Finally, now that you know a lot more about how modern AI works, its pros and cons, and how to mitigate those cons, let’s look at what other CX teams are doing well. All these examples are based on the real-world experiences of the insight and CX teams we work with.

There’s no longer any excuse for customer data to remain siloed.

We work with teams who use CustomerSure to ingest data from touchpoints and channels across the whole business, slice that data in a way which reflects both customer segmentation and operational structure, and accurately analyse that data using powerful AI tools.

This structured analysis lets those teams demonstrate their value to the board by delivering a whole-business view of satisfaction, and empower product and service owners with clear, relevant insights that they can act on to make customers’ lives better.
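As a small illustration of slicing the same responses two ways, here’s a Python sketch that averages scores by customer segment (the customer view) and by operational team (the internal view). The field names, the 0 to 10 score scale and the data are hypothetical; a real platform would also handle dates, weighting and small sample sizes.

```python
from collections import defaultdict
from statistics import mean


def average_score_by(responses: list[dict], key: str) -> dict[str, float]:
    """Group responses by one field (e.g. segment or team) and average their scores."""
    groups = defaultdict(list)
    for response in responses:
        groups[response[key]].append(response["score"])
    return {group: round(mean(scores), 2) for group, scores in groups.items()}


# Hypothetical responses gathered from different channels.
responses = [
    {"channel": "phone", "segment": "SME", "team": "Billing", "score": 8},
    {"channel": "email", "segment": "SME", "team": "Support", "score": 6},
    {"channel": "web survey", "segment": "Enterprise", "team": "Billing", "score": 9},
    {"channel": "phone", "segment": "Enterprise", "team": "Support", "score": 4},
]
print(average_score_by(responses, "segment"))  # customer segmentation view
print(average_score_by(responses, "team"))     # operational structure view
```

The same data answers two different questions: which customers are least happy, and which part of the business can fix it.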

It’s not just Consumer Duty: Many firms are under pressure to demonstrate that they identify vulnerable customers and act on their needs.

Beyond simple topic analysis, some of the best teams we work with also use AI to spot customers who may show characteristics of vulnerability, even if those customers don’t realise it themselves.

Of course, as we discussed, it would be wrong to let an LLM profile these customers without any right of rectification, but this does provide a valuable ‘amber flag’ that teams use to prioritise customers for intervention.
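One way to keep an ‘amber flag’ advisory rather than decisive is to model it explicitly, so that only a person can confirm or clear it. The Python sketch below is purely illustrative (the class names, statuses and fields are hypothetical, not any real product’s data model), and it leaves out the audit trail a real GDPR process would need.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FlagStatus(Enum):
    AMBER = "amber"          # suggested by the model, awaiting human review
    CONFIRMED = "confirmed"  # a colleague agreed and will follow up
    CLEARED = "cleared"      # a colleague (or the customer) rejected the flag


@dataclass
class VulnerabilityFlag:
    customer_id: str
    model_reason: str  # the model's explanation, shown to the reviewer
    status: FlagStatus = FlagStatus.AMBER
    reviewed_by: Optional[str] = None

    def review(self, reviewer: str, confirmed: bool) -> None:
        """Only a human decision moves a flag out of amber."""
        self.status = FlagStatus.CONFIRMED if confirmed else FlagStatus.CLEARED
        self.reviewed_by = reviewer


def review_queue(flags: list[VulnerabilityFlag]) -> list[VulnerabilityFlag]:
    """Flags still waiting for a person to look at them."""
    return [flag for flag in flags if flag.status is FlagStatus.AMBER]


flags = [
    VulnerabilityFlag("cust-001", "Mentions a recent bereavement"),
    VulnerabilityFlag("cust-002", "Says they struggle to read our letters"),
]
flags[0].review(reviewer="j.smith", confirmed=False)  # e.g. the customer said it no longer applies
print([flag.customer_id for flag in review_queue(flags)])
```

Keeping the model’s reason alongside the flag gives the reviewer something concrete to check, and clearing a flag is as easy as confirming one.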

We’ve focused heavily on feedback analysis in this guide because it’s one of the most obvious use cases for LLMs: tools that, by their very nature, turn text into numbers.

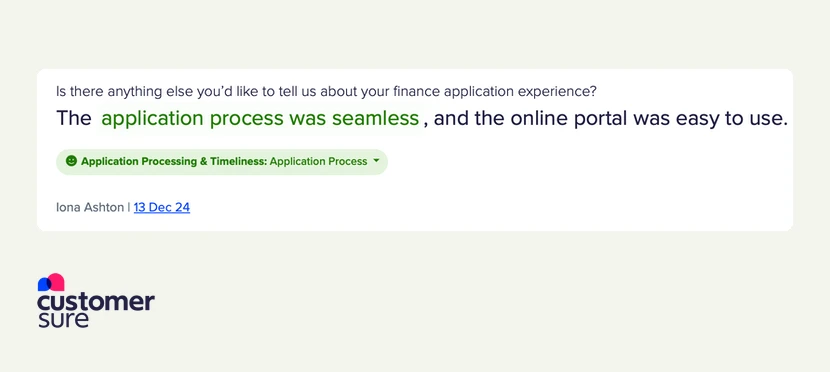

Teams we work with use our AI tools to scan feedback for overall and per-topic customer sentiment. Once the feedback is enriched with this topic and sentiment data, teams can automatically spot negative feedback and instantly send it to the right person in the business to fix things.
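The routing step itself is simple once feedback carries topic and sentiment labels. Here’s a Python sketch that assumes each enriched response looks like the dictionaries below and that a hypothetical `ROUTING` table maps topics to owners; the addresses are placeholders.

```python
# Hypothetical routing table: which person or team owns each topic.
ROUTING = {
    "Repairs": "repairs-lead@example.com",
    "Billing": "billing-lead@example.com",
}
DEFAULT_OWNER = "cx-team@example.com"  # catch-all for unmapped topics


def route_negative_feedback(enriched: list[dict]) -> list[tuple[str, str, str]]:
    """Return (owner, response id, reason) for every piece of feedback needing follow-up."""
    alerts = []
    for item in enriched:
        negative_topics = [t for t, s in item["topics"].items() if s == "negative"]
        for topic in negative_topics:
            alerts.append((ROUTING.get(topic, DEFAULT_OWNER), item["id"], topic))
        # Negative overall but no single negative topic: send to the catch-all owner.
        if item["overall"] == "negative" and not negative_topics:
            alerts.append((DEFAULT_OWNER, item["id"], "overall"))
    return alerts


enriched = [
    {"id": "r1", "overall": "negative", "topics": {"Repairs": "negative", "Communication": "positive"}},
    {"id": "r2", "overall": "positive", "topics": {"Billing": "positive"}},
    {"id": "r3", "overall": "negative", "topics": {"Parking": "neutral"}},
]
for owner, response_id, reason in route_negative_feedback(enriched):
    print(f"Alert {owner}: response {response_id} ({reason})")
```

The catch-all owner matters: feedback that is negative overall but doesn’t fit a known topic shouldn’t fall through the cracks.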

Translation and speech-to-text are both examples of how LLMs can act as force multipliers. Not all CX teams are as well funded as they deserve to be, even when they serve highly diverse customer bases (either globally or in a multicultural region).

Whilst they still lag behind true bilingual human translation, LLMs and more specialised ML translators such as DeepL are inexpensive and deliver results that are often a lot better than ‘good enough’.

You wouldn’t use these tools to, say, rewrite critical safety or compliance text from English into another language. But they are more than capable of accurately translating what a Polish tenant is telling you in a customer survey, for example, and helping you understand whether it needs an urgent response.

It can be challenging to engage people in operational roles with CX initiatives. Most teams will be familiar with colleagues who truly care about customer satisfaction but don’t have the time to read every single customer comment to work out how to prioritise things.

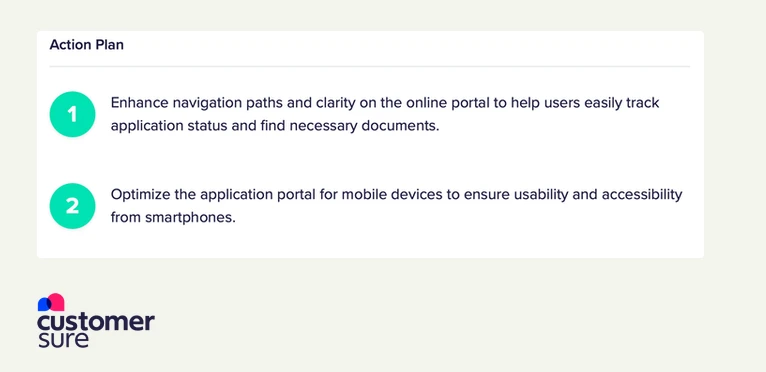

AI can produce short action plans to engage senior leaders in other areas of the business.

With good fine-tuning (i.e. when the AI understands your organisational structure well), these action plans can condense thousands of customer comments into a few actionable bullet points. Time saved on analysis gets spent on delighting customers.
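Before an LLM writes anything, condensing thousands of comments usually starts with counting. This hypothetical Python sketch tallies negative mentions per topic and turns the biggest into bullet points tagged with the responsible team. In a real workflow these counts (plus representative comments) might be the input to the model that drafts the action plan; it isn’t a description of how any specific platform generates its plans.

```python
from collections import Counter


def top_issues(enriched: list[dict], owner_of: dict[str, str], n: int = 3) -> list[str]:
    """Count negative mentions per topic and turn the biggest into short bullet points."""
    negatives = Counter(
        topic
        for item in enriched
        for topic, sentiment in item["topics"].items()
        if sentiment == "negative"
    )
    return [
        f"{owner_of.get(topic, 'Unassigned')}: {topic} ({count} negative comments)"
        for topic, count in negatives.most_common(n)
    ]


# Hypothetical enriched feedback and topic-to-team mapping.
enriched = [
    {"topics": {"Repairs": "negative", "Communication": "positive"}},
    {"topics": {"Repairs": "negative"}},
    {"topics": {"Parking": "negative", "Repairs": "negative"}},
    {"topics": {"Parking": "negative", "Billing": "negative"}},
]
owner_of = {"Repairs": "Repairs team", "Billing": "Finance"}
for bullet in top_issues(enriched, owner_of, n=2):
    print("-", bullet)
```

Tagging each bullet with an owner is what turns a summary into something a senior leader elsewhere in the business can act on.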

Returning to the list of tasks that ChatGPT considers itself good at, it’s no surprise that we see CX teams using LLMs as tutors and coaches, with astonishingly good results.

When you take motivated, smart people (and most people in CX are nothing if not motivated and smart), and give them access to the sum of human knowledge through an interface that acts like a patient teacher, amazing things happen.

We’ve watched teams teach themselves basic coding and data analysis skills, learning to build in-house tools that integrate with CustomerSure: tools that would ordinarily have a lead time of months if they were routed through a centralised IT department.

This makes these CX teams more agile, and gives them greater job satisfaction: Rather than being held hostage by technology, they’re learning to use the tools they have to build better outcomes for customers.
